[Part 1/3] Does Nvidia deserve its reputation as the AI golden child?

The most hyped company in the world right now. Are its products really that good?

Welcome to the first article from Invest with Confidence! I take deep technical and financial knowledge, and distill it down to an easy-to-understand report. You won’t need any engineering or financial background to gain a lot of value from this article. I’ll frame all the key takeaways in simple terms so you can understand their significance. By the end, you’ll be able to have an educated, high-confidence opinion on the company. I’m excited to get started.

If you’re new to this publication, I recommend checking out the quick start guide. It’ll tell you what to expect from these articles, and get you excited for what’s to come.

👋 Introduction

The first series of articles will focus on the semiconductor industry, which has attracted a lot of attention during the AI boom of the last few years. Despite the hype and the huge run-up in stock prices, I believe we’re in the nascent stages of AI growth, so some companies are still poised for excellent growth.

We’ll start at the end of the supply chain that’s closest to the customer, and work our way backwards. That means we’re looking at chip designers today, also known as fabless companies. And there’s no bigger fabless company than Nvidia (ticker: NVDA). There’s a lot of excitement and a lot of misconceptions about Nvidia. We’re going to clear those up.

I have held a position in NVDA for the last 18 months. My initial investment was pretty small and, while I’ve enjoyed watching the run-up in the stock price, I’ve been itching to buy more. The goal of this analysis is to find out whether it’s a good time to do so.1

There’ll be 3 total articles on Nvidia:

1. Understanding the product
2. Examining the financials
3. Assessing the valuation

Taken collectively, you’ll have a complete picture of the business and will be able to make an investment decision with conviction. Let’s start with the product.

Understanding the product

Most people understand that Nvidia is helping power today’s AI revolution, but don’t know the specifics of how. Let’s do a little background to put their products in context.

How do the latest AI models work?

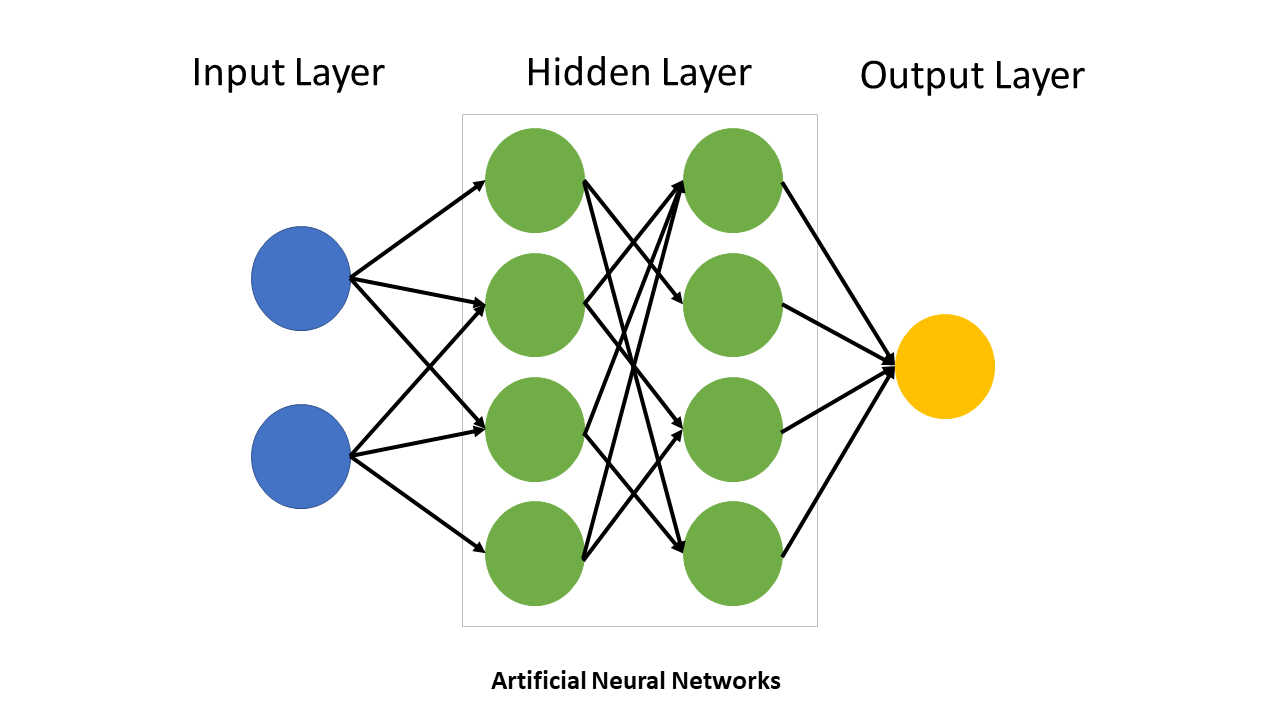

The craze surrounding seemingly intelligent chatbots, such as ChatGPT, has driven a lot of the hype in the AI cycle. A later article will be dedicated to the mechanics of these chatbots, specifically the large language models that power them, but the key for today is the underlying architecture, called a neural net. Neural nets are the most advanced and powerful architecture in wide use for machine learning today. Their design seeks to emulate the human brain: many, many nodes communicate with each other to make complex decisions. Generally, the more nodes in the net (the bigger the brain), the more powerful it is. The race amongst engineers is to make the biggest “brain” possible, so the models can be as effective and accurate as possible in making decisions.

Nodes in a neural net.

We can improve AI model performance if the models can handle more nodes and more parameters.
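To make “more nodes and more parameters” concrete, here’s a minimal sketch that counts the parameters in a simple, fully connected neural net. The layer sizes are hypothetical and far smaller than anything used in practice, and real models are structured in more elaborate ways, so treat this as an illustration of the idea rather than how any particular model is built.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected neural net.

    layer_sizes lists the number of nodes in each layer, input layer first.
    """
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per connection between the two layers
        total += outputs           # one bias per node in the receiving layer
    return total


small_net = [4, 8, 2]   # hypothetical tiny network
wider_net = [4, 16, 2]  # same network with the middle layer doubled

print(count_parameters(small_net))  # 58
print(count_parameters(wider_net))  # 114
```

Doubling the nodes in one layer roughly doubles the parameters the hardware has to store and update, which is why bigger “brains” need more compute.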

What is a GPU, and why are they needed?

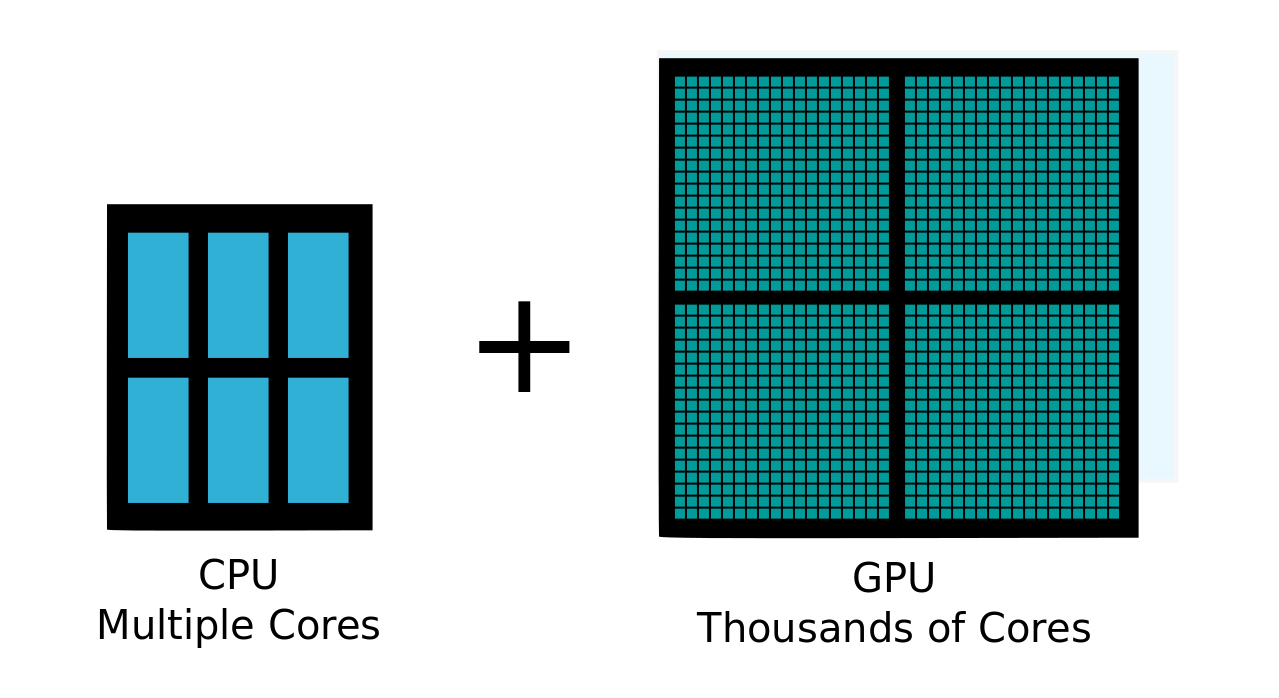

You’re probably familiar with the CPU, the traditional processor that runs software. A CPU is good at working through tasks one after another, but how quickly it finishes is limited by how powerful its handful of cores are. Think about having to lift 1000 pounds. If I had one powerful bodybuilder, they could lift the 1000 pounds by themselves. That’s a CPU.

How is a GPU different? A GPU is a graphics processing unit with many, many more cores than a CPU, which allows it to parallelize tasks. Instead of the one bodybuilder picking up all 1000 pounds, I could have 100 average people each pick up 10 pounds. I’m accomplishing the same outcome, but I’ve split up the work amongst many workers. That’s a GPU. (GPUs were originally built for what the name says: to process graphics. Consider a TV that has 2 million pixels. A GPU decides what color each pixel should show, and it has to be really fast since it’s making these decisions many times per second. The chip parallelizes the work by dividing the tasks up among the cores of the GPU. No pixel cares or needs to know what another pixel is showing. This is considered highly parallelizable, compute-intensive work, which is perfect for a GPU.)
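The pixel example can be sketched in a few lines of Python. Everything here is hypothetical (the `brighten` function, the frame, the worker count), and Python threads won’t actually make this faster the way a GPU’s cores would. The point is only to show that, because no pixel depends on another, the work can be handed to many workers and still produce the same result.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel):
    """Compute one pixel's new value; needs no information about other pixels."""
    return min(pixel + 40, 255)


def render_serial(pixels):
    # One worker walks through every pixel in order (the lone bodybuilder).
    return [brighten(p) for p in pixels]


def render_parallel(pixels, workers=4):
    # Several workers each take pixels from the list (the 100 average people).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


frame = [0, 100, 200, 250] * 1000  # hypothetical grayscale frame, values 0-255

# Splitting the work does not change the answer.
assert render_serial(frame) == render_parallel(frame)
```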

Core in a CPU vs a GPU

GPUs happen to be perfect for building AI models. Remember the nodes we talked about in the previous section? The work for each node can be delegated to a core within the GPU. Once again, this is highly parallelizable, compute-intensive work. GPUs have unlocked huge advancements in the processing capabilities of AI models. Let’s tie this back to our understanding of AI models from the last section: the more powerful a GPU is, the more nodes can be in the neural net, and the bigger the “brain” is. Better GPUs enable better AI models.

What does Nvidia’s product do?

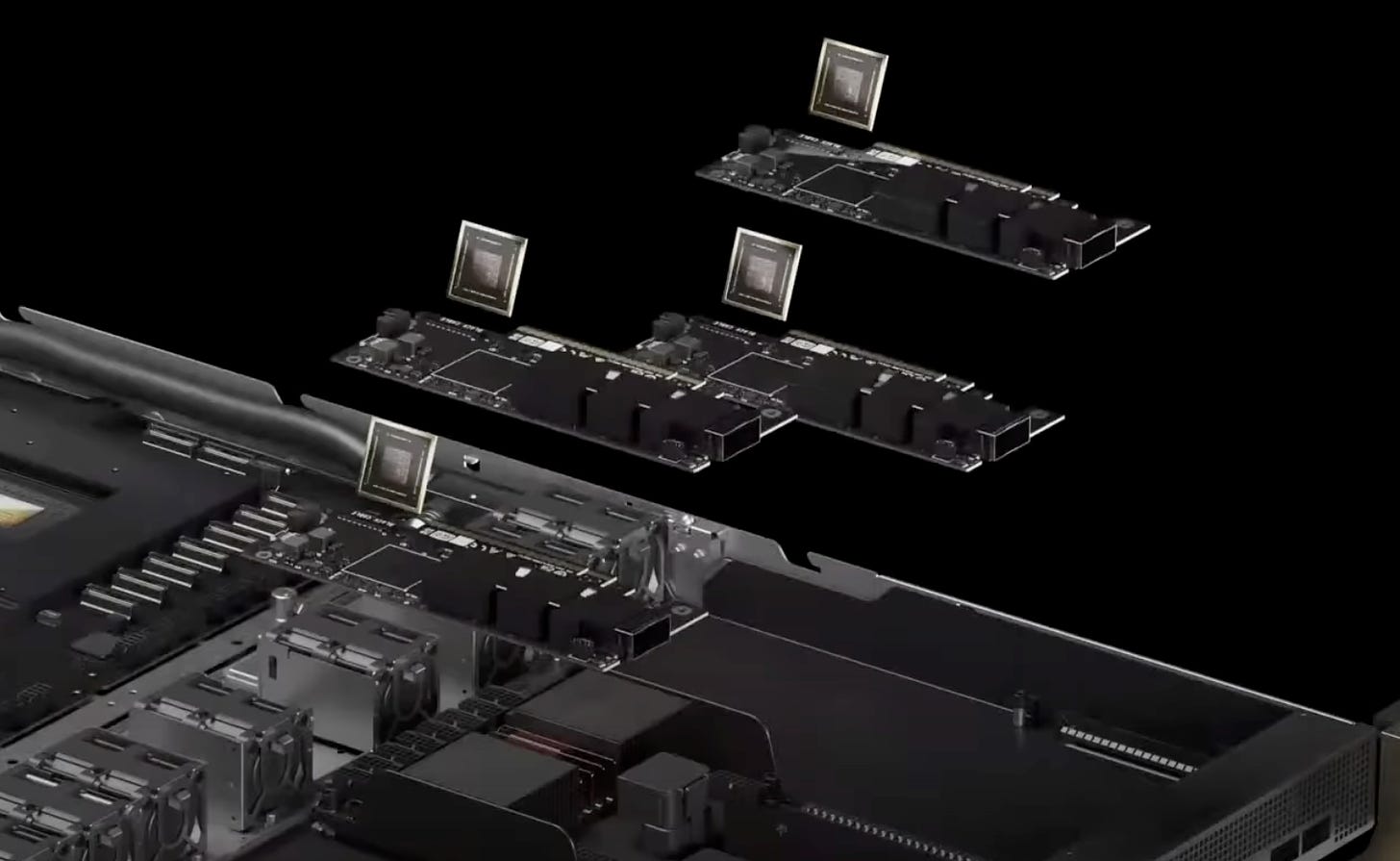

Nvidia designs GPU chips.2 Their goal is to add as much compute power to a single chip as possible; they can then bundle these chips together in a larger architecture to provide “AI factories” to customers. Let’s look at how that plays out:

1. Start with the H100 GPU chip (the current-gen Hopper architecture, hence the ‘H’).
2. Connect an array of these chips with the NVLink platform, which lets their compute be “stacked” or added together to form what behaves like one giant GPU, called the DGX.
3. Link multiple DGX nodes over an ultra-low-latency networking platform called Quantum-2 InfiniBand to create an AI supercomputer.
4. Operationalize hundreds of these AI supercomputers to create an AI factory.

AI factories. Racks on racks on racks.

This is what people are talking about when they mention Nvidia’s AI factories. We’re talking about hundreds or thousands of GPUs that have been optimally combined to create the effect of one giant AI processing node. Nvidia has successfully built a scalable architecture for their already-best-in-class chips. That’s what allows customers to create super-powerful AI models, like ChatGPT and Gemini and Claude. Without this level of processing power available, we wouldn’t have seen the popularity explosion of AI chatbots. Nvidia is what’s enabling the innovation behind the scenes.
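Here’s a rough back-of-the-envelope sketch of how the GPU count grows as you move up that hierarchy. A DGX H100 system houses eight H100 GPUs; the other numbers (DGX nodes per supercomputer, number of supercomputers) are hypothetical values I picked purely for illustration, not Nvidia’s actual configurations.

```python
GPUS_PER_DGX = 8  # a DGX H100 system houses eight H100 GPUs


def total_gpus(dgx_per_supercomputer, supercomputers):
    """GPUs in a factory built from identical supercomputers."""
    return GPUS_PER_DGX * dgx_per_supercomputer * supercomputers


# Hypothetical sizes, chosen only to show how quickly the count grows.
print(total_gpus(dgx_per_supercomputer=32, supercomputers=1))   # 256
print(total_gpus(dgx_per_supercomputer=32, supercomputers=10))  # 2560
```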

What makes Nvidia better than its competitors?

They were early to recognize trends. Nvidia has consistently been ahead of the curve when it comes to identifying demand, first with gaming, then cryptocurrency, then AI. They’ve taken advantage of this foresight by getting a head start on building chips that are optimized for each use case. They delivered a flagship GPU to OpenAI (makers of ChatGPT) long before anyone was talking about either company. They’ve had such a long head start that no competitor has been able to close the gap.

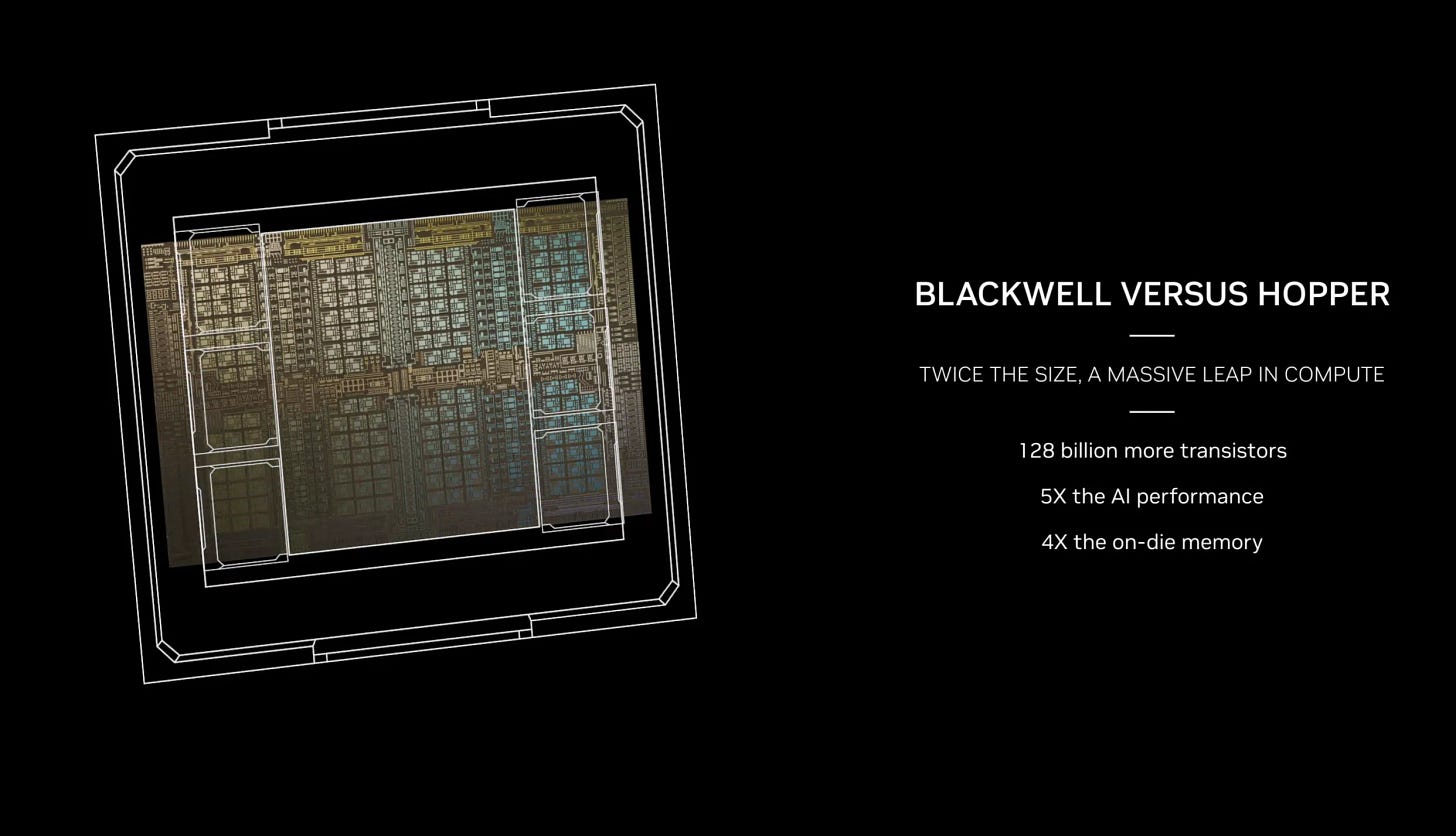

They’re at the forefront of Moore’s Law. That law (really more of a prediction) says that the number of transistors on a chip will double roughly every two years. (For simplicity’s sake, more transistors on a chip means a more powerful chip. That’s all we need to know for today’s purposes.) Nvidia has consistently had the GPU chip with the most transistors on it. Everyone else is trying to catch up, while they keep moving ahead.
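If it helps to see the doubling spelled out, here’s a small sketch of the prediction. It assumes a clean two-year doubling period, which is the textbook version of Moore’s Law rather than a measurement of any real chip.

```python
def moores_law_multiple(years, doubling_period_years=2):
    """Growth multiple in transistor count predicted after `years`."""
    return 2 ** (years / doubling_period_years)


for years in (2, 4, 10):
    print(years, moores_law_multiple(years))
# 2 2.0
# 4 4.0
# 10 32.0
```

So a chip designed ten years later would be expected to hold about 32 times as many transistors.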

They’re innovating the architecture of chips. They recently announced a next-gen architecture, called Blackwell, which is literally pushing the boundaries of physics. It’s estimated to be 3 to 5 times more powerful than the current-gen Hopper chips (which were already the industry leader by far). It’ll require specialized custom manufacturing processes to build. That architecture is a key part of their secret sauce: competitors simply haven’t figured out how to reach that level of compute and throughput. In the last 8 years, Nvidia’s GPU chips have seen a 1000x increase in AI compute power. That level of improvement is hard to fathom.
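To put the 1000x figure in perspective, here’s a quick bit of arithmetic. It assumes the growth was spread evenly across the 8 years, which is a simplification, and it compares AI compute against a transistor-count doubling pace, so it’s not a strict apples-to-apples comparison.

```python
import math

years = 8
claimed_multiple = 1000  # the 1000x figure quoted above

# Constant yearly multiple that compounds to 1000x over 8 years.
per_year = claimed_multiple ** (1 / years)

# How long each doubling takes at that pace.
doubling_time_years = years / math.log2(claimed_multiple)

# What a two-year doubling pace would deliver over the same 8 years.
two_year_doubling_multiple = 2 ** (years / 2)

print(round(per_year, 2))             # 2.37
print(round(doubling_time_years, 2))  # 0.8
print(two_year_doubling_multiple)     # 16.0
```

In other words, a 1000x gain in 8 years works out to more than doubling every year, versus the 16x you’d get from doubling every two years.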

Constantly innovating and pushing the envelope.

They’ve built a compelling ecosystem. On top of their hardware, Nvidia has the CUDA platform, which helps developers build software, AI software included, that runs on its GPUs. It simplifies (or in software engineering terms, “abstracts away”) the complexity of writing code that takes advantage of the GPU’s capabilities. This is then extended to a whole suite of libraries, APIs, and tools that make the developer experience easier. Think of it like this: instead of having to know how an internal combustion engine works, I can just push the accelerator pedal in a car and make it go. Sure, I could spend the time to build an IC engine on my own and not be dependent on a car manufacturer. But am I really going to do that? No, I’m going to buy a car and learn how to be a good driver just by using the pedals. Similarly, I can make the best AI product not by reinventing the underlying hardware, but by using what’s already been built for me. Nvidia has done such a good job of providing useful tools and platforms that it’ll be an uphill battle for competitors to displace them as the de facto go-to option.

What are risks to their product dominance?

Geopolitical tensions could upset the supply chain. As mentioned in a footnote earlier, Nvidia designs the chips, but they rely on a Taiwan-based company (TSMC) to manufacture them. TSMC is the main global manufacturer of these chips, so any interruption to their operations could have devastating impacts on Nvidia’s ability to meet customer demand. Taiwan has long been a hotbed of contention between the US and China, even more so lately with the upcoming US presidential election. If the US and China escalate their jockeying over Taiwan (unlikely, but possible), we could see operational disruption that would severely impede Nvidia.

Newly minted millionaires at Nvidia could cause brain drain. “Brain drain” is a term that refers to the loss of critical employees in sales, operations, engineering, etc., specifically employees who have a lot of institutional knowledge and had a big hand in the success of the company. Success can cause brain drain: huge windfalls in bonuses or stock give people the cushion to leave, employees are hired by competitors, employees leave to start their own businesses. Overall, it’s a good problem to have, because it means the company is highly successful and has talented employees, but it can still be a short-term risk.

Major customers could change their minds about continuing to spend in the AI arms race. AI-powered products have shown a noticeable absence of profitability during this early stage of investment (one of the reasons that hype has outpaced reality). Google, in their earnings call, justified their AI spend by saying that the risk of not investing was higher than the risk of overspending. What happens if the tech sector, as a whole, decides to scale back on AI expenditure because the bottom line doesn’t justify it anymore? Nvidia’s business could take a hit in the short term.

Takeaways from the product section

Nvidia has taken a huge head start and combined it with constant innovation, to grow their lead at the front of the pack. Even if all they did was design GPU chips, they’d still have a technologically dominant product. But their dominance extends further, to the highly compelling ecosystem that makes developers’ lives much easier. There are some inherent risks, but not significant enough (yet) to raise concerns about the product. With what I’ve learned about the product, I feel good about Nvidia’s long term prospects.

The next question is, does their product dominance translate into successful sales? Do they run their processes efficiently? Can they scale their operations?

Spoiler alert: their financials are borderline unbelievable. We’ll pick up with that in the next article.

Agree? Disagree? I’d love to hear from you - share your comments below!

⚠️ This is not investment advice.

1 I completed most of the research for this article prior to July 16; Nvidia stock is down about 15% since then. The research and conclusions are largely unaffected by that movement. I plan to release an update on Nvidia after their 2024 Q2 earnings call on August 28.

2 Note, they don’t actually build them. They rely on TSMC, a Taiwan-based company, to do so. We’ll talk a little bit about the supply chain later in this article, and a future article will deep dive into TSMC.
